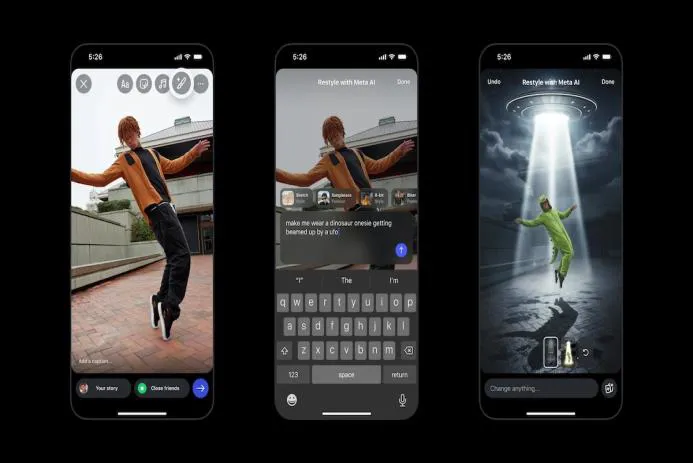

Introducing AI Magic to Your Instagram Stories

After working around social apps for the last few years, you start to recognize the moments that quietly tilt the whole landscape, and this feels like one. Meta has folded AI photo and video editing directly into Instagram Stories: not as a separate lab experiment, but right where people already create. Think of it as a creative assistant looking over your shoulder: fast, a little mischievous, and responsive to short text directions. Want to nudge a sky toward pink, change someone’s hair color, or reimagine an entire scene? Type it in and watch it happen. It feels like magic, but it’s built like a tool, with a belt full of options.

Unlocking New Possibilities with Text Prompts

Meta’s smarter image tricks used to live behind the curtain, inside a chatbot or a separate app you had to hunt for. Now they’re baked into Stories through text prompts. You type simple, conversational commands such as “add golden hour lighting,” “make background a beach at sunset,” or “swap hair color to auburn,” and the AI reinterprets your media. In other words, prompt-driven image editing now lives comfortably in-app. It’s dirt-simple on the surface, but the implications are big: creators, casual users, and brands can all iterate faster. Sometimes the edits are uncannily good; other times you get a weirdly delightful result that makes you laugh. That unpredictability is part of the charm.

How to Use the New Editing Features

Open Stories, tap the paintbrush icon at the top, and head to the 'Restyle' menu, which is Meta’s AI Restyle feature. Options are grouped around adding, removing, or changing elements. Want virtual sunglasses that actually sit right on the nose? Try “add vintage round sunglasses.” Curious how your photo would look as a watercolor painting? Type that in and see. In my experience, short, descriptive prompts usually give the most controlled results, while playful, vague prompts often produce the most interesting (if unpredictable) art. I spent an afternoon testing this with colleagues: we had instant favorites and a few that needed a second pass. That’s normal. Expect a little back-and-forth as you learn the tool’s voice.

Enhancing Video Content with AI

The same prompt-driven logic applies to video: add weather effects, inject dramatic lighting, or overlay realistic particles like snow or embers. I watched a short clip become a moody, cinematic slice after a couple of typed prompts, with no timeline editing required. For creators obsessed with engagement metrics, these micro-enhancements can make a short-form Story worth stopping the scroll for. But a reality check: motion makes the models work harder. Expect some artifacts in complex frames, at least early on, which is why questions like “why are there artifacts when I apply AI to videos?” keep coming up. Still, it’s a very promising way to boost Story engagement.

Understanding Meta's Privacy Terms

Here’s the bit that makes people pause: to use these tools, you accept Meta’s AI Terms of Service. Concretely, that means Meta’s models analyze your photos and videos, summarizing them, modifying them, and generating new content informed by what you uploaded. From inside the industry, that trade-off is familiar: the platform needs data to improve its models and to deliver personalized edits. But it’s a gray area. Who owns the derivatives, and how are they reused? People ask, “Can Meta use my photos to train AI?” The honest answer is: sometimes, depending on the terms and your settings. Read the fine print. I’ve been in rooms where executives argued long and hard about exactly this: useful capabilities versus real privacy questions. There’s no simple, one-size-fits-all answer.

Staying Ahead in the Competitive AI Market

Meta didn’t build this out of generosity. This is about staying sharp in a market where “AI-first” features are table stakes. Remember experiments like “Write with Meta AI” or early AI-generated feeds? Those were tests. Adding prompt-driven editing to Stories is a multi-pronged play: keep creators engaged, lock in habitual usage, and gather signals to improve its models. Smart, and predictable too. But the real fight won’t just be about technology; it’s about taste. Who can make results feel authentic rather than canned? That’s where the winners will emerge.

Parental Controls: Addressing Safety Concerns

Meta is also offering parental controls, a necessary nod rather than a marketing line. Parents can disable AI interactions for teens and get oversight of the AI features used on their kids’ accounts. That matters because teens are adventurous (and vulnerable); they’ll push features just to see what happens. These controls don’t erase risk, but they signal that Meta knows regulators and families will be watching. Personally, I’m glad to see the option, though I’m skeptical about how consistently families will use it. Implementation, plus some basic education, will make or break their effectiveness. For parents asking “How do I disable AI features for my teen on Instagram?”, the answer is in the settings, but it’s worth checking that the configuration actually matches how your family uses the app.

All told, embedding AI editing directly into Instagram Stories is a meaningful step. It lowers the barrier to creative expression, adds novelty for users, and tightens Meta’s hold on attention. Will it be flawless on day one? No. Will it change how we make small moments feel cinematic? Absolutely. Pull up the paintbrush, type a prompt, and experiment; sometimes the best ideas come from happy accidents. For a deeper how-to, check our guide to AI tools for Instagram, which walks through the Restyle menu and the best text prompts for Instagram AI edits.

Thanks for reading!
