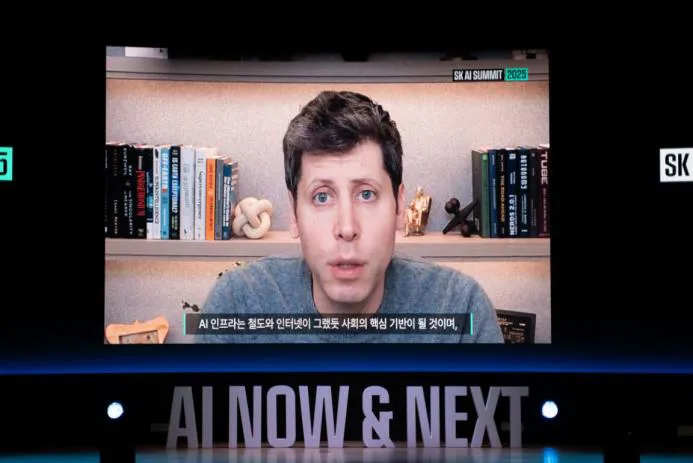

What happened: OpenAI declares a ‘code red’

Sam Altman, OpenAI’s CEO, reportedly declared a “code red”. It is not drama for its own sake but a deliberate refocus after Google’s Gemini 3 gained rapid traction in the market. A leaked memo, covered by The Information, says OpenAI will pause or slow some planned rollouts (ads, shopping and health AI agents, and the personal assistant Pulse) to put more engineering weight behind the core ChatGPT experience.

Why this matters: competition from Gemini

Gemini 3, released in mid-November, has climbed quickly up leaderboards and into headlines. Coverage from Ars Technica and comparisons on LMArena show the model posting strong results on public benchmarks, and prominent industry figures (Salesforce CEO Marc Benioff among them) have been openly impressed. Those public leaderboards and benchmark debates matter because perception shapes product adoption fast.

User growth and the numbers

- ChatGPT: OpenAI reports roughly 800 million weekly users (OpenAI). That is a staggering base, but it is no guarantee of staying ahead.

- Gemini app: Business Insider traces rapid growth, from roughly 450M monthly active users (MAU) in July to about 650M in October. That works out to roughly 200 million added users in about three months, a surge that changes the narrative (Business Insider).

What Sam Altman wants teams to do

The memo reportedly reassigns people and sets up daily coordination calls. Engineers and product managers are now primarily accountable for ChatGPT performance, reliability, and short-cycle model fine-tuning. In plain terms: stop some experiments and fix the engine. That’s a classic move when a competitor posts a big performance claim: triage the experience, reduce variability, and tighten safety mitigations.

Is this a repeat of 2022’s ‘code red’?

It’s déjà vu. Back in late 2022, Google scrambled after ChatGPT’s breakout and reallocated teams. Now the script flips: Gemini 3’s rise prompts OpenAI to regroup. From my experience watching product sprints like this, these cycles are messy. Technical debt surfaces quickly, but a short-term focus can shift perception and benchmarks almost overnight.

Financial stakes: why speed matters

This isn’t just pride. As a Reuters Breakingviews column notes, OpenAI risks spreading its resources thin by juggling ambitious R&D with business deals. OpenAI’s partnerships, including publicly announced investments and collaborations with Thrive Capital and Accenture, are real leverage points (OpenAI, OpenAI partnership).

But there’s a crunch. Google has an ad-funded cushion, while OpenAI’s margin story depends heavily on raising capital and on large compute commitments. Reports highlighting big cloud and chip contracts and a high fixed-cost base make the company’s urgency understandable (Fortune).

Product roadmap: what’s being delayed—and what’s coming

- Delayed: ad integrations, shopping and health AI agents, and the personal assistant Pulse. Yes, Pulse may slip while the core work happens.

- Continued focus: tightening ChatGPT’s core through performance tuning, safety mitigations, and more robust reliability testing.

- Upcoming: the memo reportedly teases a simulated reasoning model due soon, which could be the tactical counter to Gemini 3’s benchmark wins.

Industry reaction and perspective

Reactions are mixed. Some call the memo strategic noise, a way to blunt Gemini’s PR momentum. Others accept a simple truth: competition accelerates innovation. Picture a fintech shop watching two big providers sprint. It can piggyback on rapid infrastructure improvements, but it also inherits a higher bar for reliability and compliance. Faster cycles help startups in some ways and make life harder in others.

Key takeaways

- OpenAI is prioritizing ChatGPT improvements over short-term feature rollouts in response to Gemini 3 and the app’s 2025 user surge.

- Competition is intensifying: Gemini’s rapid user growth has reshaped the AI narrative and triggered a resource reallocation at OpenAI.

- Money and compute matter: OpenAI’s cloud and chip commitments create strategic urgency and leave little slack.

- Fast cycles, higher risk: quick sprints can move metrics and perception, but increase the need for safety, testing, and monitoring.

Further reading and sources

For readers who want to dig deeper, these pieces informed this write-up:

- The Information: OpenAI ‘code red’ report

- Ars Technica: Gemini 3 coverage

- Reuters Breakingviews column

- Fortune analysis of OpenAI’s finances

- Business Insider: user-growth reporting

Thanks for reading!

If you found this article helpful, share it with others