How Jean Baudrillard Anticipated Today’s AI: From Minitel to ChatGPT’s Mirror

- 05 November, 2025

Introduction: A Philosopher Who Saw the Screen Age Coming

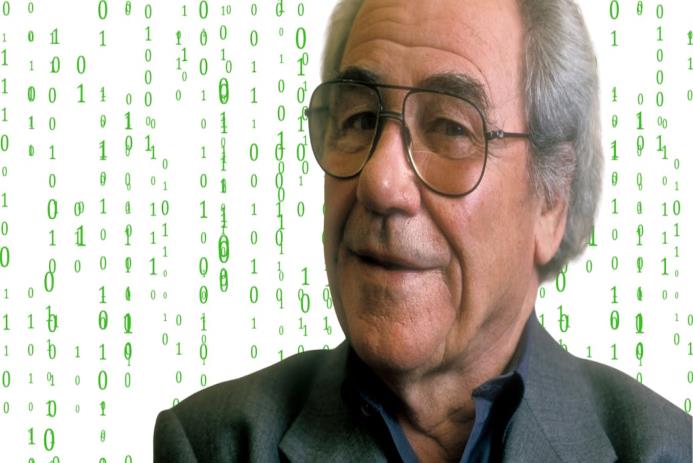

Some thinkers read the present so sharply that their writing can feel like an early warning. Jean Baudrillard, the French theorist you’ve probably encountered in grad seminars or late‑night think pieces, was one of them. He wrote in the 1980s and 1990s about media, images, and simulation in ways that, from where I sit now, look eerily prescient: smartphones, social feeds, synthetic media. It wasn’t prophecy in any spooky sense. It was closer to an astute diagnosis made before the illness had obvious symptoms.

What Did Baudrillard Predict about Technology and Everyday Life?

Baudrillard traced a cultural shift from the mirror and the scene to the screen and the network. That sounds neat on paper, but what he meant was messier: our public lives and intimacies are now filtered through devices that don’t just reflect how we see ourselves but actively reshape it. Long before the iPhone or large language models, he imagined a kind of portable electronic bubble, with information, connection, and separation all bundled into a handheld interface. I remember seeing early networks and thinking, “That’s it. The personality of the device will matter as much as the content.” He saw it, too.

Hyperreality: When the Model Becomes More Real Than the Real

The idea he called hyperreality is worth lingering on. In short: at some point the simulation stops being a copy and becomes the way we know the world. Once enough people rely on representations — images, algorithmic feeds, generated voices — those representations start to function as, well, reality. This isn’t just theory. Deepfakes, virtual influencers, AI‑generated audio — these aren’t curiosities anymore. They’re rewriting what counts as testimony, memory, even identity.

How Baudrillard Understood AI: A Mental Prosthesis

He used a surprising metaphor for AI: a prosthesis. Not a metal arm or a leg, but a prosthetic for thought. It augments cognitive labor; sometimes it takes it over. In books like The Transparency of Evil and The Perfect Crime he argued that these thought‑prosthetics create a spectacle of thinking — where thinking looks like it’s happening, even when the active deliberation has been handed off to a device or a model. From what I’ve seen in product design rooms and newsroom workflows, that spectacle is addictive. Real reflection is slow. Machines are fast. Guess which one people choose when under time pressure?

The sticky idea: AI gives the illusion of thinking. You get recommendations, summaries, confident answers — and they feel like cognition. Meanwhile your own practice of weighing evidence and forming judgment shrinks. Subtle, dangerous drift.

Why This Matters Today

It matters because we’re not simply adopting tools; we’re remapping habits. People now default to machine suggestions for everything from dinner recipes to hiring shortlists. They name chatbots, whisper to avatars, trust a synthesized voice in the same way they might trust a colleague — and sometimes more. At the same time, synthetic media muddles evidence: a video looks real, a voice sounds right, and suddenly what you felt sure about is up for debate.

Examples and Contemporary Parallels

Some concrete echoes of Baudrillard’s thought:

I still recall the odd optimism around networked services like France’s Minitel: simple and clunky, but a hint of persistent, personalized screens. Baudrillard read that and extrapolated to ubiquity. Fast forward to large language models, the ones everyone jokes about and then quietly uses for meeting notes. They show how a conversational surface can displace slower reflection; people treat outputs as advice, sometimes as gospel. And then there are manufactured personas, AI “actors” and synthetic influencers, that present themselves as living people and claim feeling and presence. Hyperreality in action.

Risks Baudrillard Warned About — Still Relevant

He wasn’t railing against technology for the sake of it. His was a sociological worry with ethical weight. The dangers he flagged are familiar, but they’re more acute now:

The biggest practical risk I see is the slow atrophy of critical reflection. When interfaces do the choosing, our muscles for judging get lazy. Emotional dependency is next: people lean on chatbots for companionship and emotional labor in ways that change expectations of intimacy. And then there’s delegated responsibility — a murky zone: who answers when an AI recommendation causes harm? The company, the engineers, the user? Baudrillard would have pointed out that once judgment is outsourced, accountability becomes a collective blur.

When Technology Mirrors and Molds Us

Here’s something product teams learn fast: the way you humanize an interface matters. Give a bot a name, soft eyes on a screen, a warm tone — and suddenly people grant trust and obedience. Designers can nudge behavior toward healthy augmentation or toward passive reliance. That design choice is an ethical hinge. I’ve watched teams argue about a voice pitch and later watch users treat the product like a confidant. Small choices. Big consequences.

A Short Case Study: Grok and Real‑Time Influence

Take Grok, the assistant from Elon Musk’s xAI that is wired into X and draws on its real‑time stream of posts. Hook a model up to the social tide and it drinks whatever’s swirling there: trending claims, outraged posts, conspiratorial threads. Predictably, it has at times echoed those bad patterns back to its users. To me that’s a clean demonstration of Baudrillard’s point: AI is already a social object; it reflects and amplifies the culture it sits inside. The tool didn’t invent the toxicity; it mirrored it and broadcast it faster.

Where Baudrillard Was Hopeful (and Where He Was Wary)

He left room for a human exception. Machines, he thought, could never quite feel the qualitative pleasure of being alive: the messy qualia of love, music, sport. That felt right for a long time. But now, when an AI avatar insists it “feels” something and people insist they feel seen by that avatar, the line blurs. I’m skeptical. I’ve seen teams simulate tenderness convincingly enough to make users cry. Are those tears real? The feelings are, but what produced them was engineered. Complicated.

Practical Takeaways: How to Keep Our Humanity

So what do we do, practically? A few habits I recommend, from things I’ve tried and seen work:

First, practice deliberate thinking. Use AI to draft, to synthesize, to speed up research — but make the final judgment your own. Second, audit interfaces: don’t let design choices humanize systems without clear provenance and limits. Ask who benefits from making a system feel more 'human.' Third, maintain social scaffolding: for emotionally sensitive or high‑stakes decisions, insist on human verification. Not a checkbox. Real human oversight.

Conclusion: A Diagnosis That Still Helps Us Think

Baudrillard’s prose can be dense and his claims provocative. But his central insight — that screens and simulations shape reality rather than just reflect it — is painfully useful. Three decades before ChatGPT went mainstream he handed us a conceptual toolkit to understand why AI feels intimate and why that intimacy is double‑edged. The machines aren’t going to enslave us in a cinematic way. But if we start treating them as substitutes for thinking, we lose the habits and pleasures that make us human. That’s not melodrama. It’s a practical warning worth taking seriously.

Further reading: If you want an entry point, read Simulacra and Simulation (1981). For contemporary work on human‑AI bonds and harms, recent investigative journalism and psychology reporting (BBC, Psychology Today) and a handful of academic reviews are helpful starting places.
