
Introduction — Why agentic workflows matter

AI agents, agentic architectures, agentic workflows: the press loves these labels. Trouble is, the buzz often outpaces the engineering. From what I've seen building and iterating on agent-powered systems, the real payoff arrives when you stop treating an agent as a magic box and start treating it like a teammate: give it a clear role, the right tools, and a workflow that lets it plan, act, and learn. This piece cuts through the hype and walks through practical patterns, sensible use cases, and concrete examples you can use to evaluate or design your own systems.

What are AI agents?

AI agents are systems that pair large language models (LLMs), which act as the reasoning engine, with tool integrations and memory so they can complete multi-step tasks with limited human supervision. Instead of returning a single, static response to an instruction, an agent can plan, choose tools, take actions, inspect the results, and adapt over time.

Core components of an AI agent

An agent combines three components: an LLM for reasoning, tools for acting on external systems, and memory for carrying context across steps and sessions. Example: picture an agent assigned to fix a failing ETL job. It reads error logs (tool), breaks debugging into steps (reasoning), runs test queries (tool), and records successful fixes in long-term memory so future incidents resolve faster. Simple to describe; the trick is combining the tools, the prompt engineering, and a reliable validation loop.
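To make the components (LLM reasoning, tools, memory) concrete, here is a minimal Python sketch of the ETL example. Everything in it is hypothetical: the `EtlRepairAgent` class, the fix names, and the stub tools are illustrative, and `propose_fixes` is a scripted placeholder where a real agent would call an LLM. Memory is a plain dict keyed by a normalized error signature, so a recurring failure is retried with the fix that worked last time before anything else.

```python
import re
from typing import Callable, Optional

Tool = Callable[[str], bool]  # takes the error text, returns True if the fix verified


def signature(log_line: str) -> str:
    """Normalize an error so recurring incidents share one memory key:
    digits (dates, partition numbers, row counts) become '#'."""
    return re.sub(r"\d+", "#", log_line.strip())


class EtlRepairAgent:
    def __init__(self, tools: dict[str, Tool]) -> None:
        self.tools = tools                  # tools: actions on external systems
        self.memory: dict[str, str] = {}    # memory: error signature -> fix that worked

    def propose_fixes(self, error: str) -> list[str]:
        # Reasoning placeholder (hypothetical): a real agent would ask an LLM
        # to decompose the debugging task and rank candidate fixes.
        return ["rerun_with_schema_refresh", "backfill_missing_partition"]

    def handle(self, error: str) -> Optional[str]:
        sig = signature(error)
        candidates = self.propose_fixes(error)
        remembered = self.memory.get(sig)
        if remembered is not None:
            # Try the previously successful fix first, then the rest.
            candidates = [remembered] + [c for c in candidates if c != remembered]
        for fix in candidates:
            if self.tools[fix](error):      # run the fix plus a test query
                self.memory[sig] = fix      # record what worked
                return fix
        return None                         # nothing verified: escalate to a human


# Stub tools that log their calls; only the backfill "succeeds".
calls: list[str] = []


def make_stub(name: str, succeeds: bool) -> Tool:
    def run(error: str) -> bool:
        calls.append(name)
        return succeeds
    return run


agent = EtlRepairAgent({
    "rerun_with_schema_refresh": make_stub("rerun_with_schema_refresh", False),
    "backfill_missing_partition": make_stub("backfill_missing_partition", True),
})
first = agent.handle("2024-05-01 partition 17 missing for table orders")
first_calls = list(calls)
calls.clear()
second = agent.handle("2024-05-02 partition 18 missing for table orders")
print(first, first_calls)   # first incident: both candidate fixes are tried
print(second, calls)        # recurrence: memory goes straight to the backfill
```

The second incident differs only in its digits, so it maps to the same signature and skips the failed schema refresh entirely.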

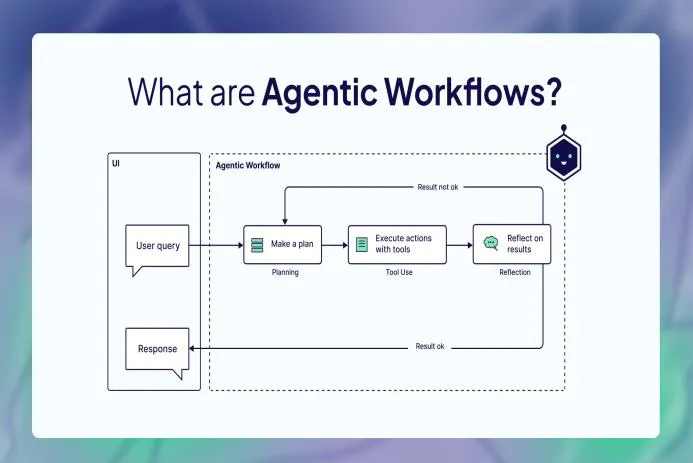

What are agentic workflows?

A workflow is a defined sequence of steps to reach a goal. An agentic workflow is one where an agent (or a handful of agents) dynamically plans, executes, and iterates on those steps—using tools and memory to interact with external systems and adapt to new information.

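Put another way, think of workflows on a spectrum, from fixed, deterministic pipelines at one end to fully agentic workflows, where agents plan and adapt their own steps, at the other.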

Agentic workflows vs. agentic architectures

Important distinction: an agentic workflow is the behavior — the step-by-step playbook the agent follows to reach a target. An agentic architecture is the system blueprint that makes those workflows possible: LLM selection, tool integrations, permission layers, memory stores, observability, and operational controls. One is choreography; the other is the stage, lights, and safety rails.

Common patterns in agentic workflows

In practice, agentic workflows are built from a small set of atomic patterns. Mix and match these and you cover most real-world needs.

1. Planning (task decomposition)

Agents split a complex task into ordered subtasks. This can reduce hallucination, enable targeted tool use, and often make parallelization possible. Use it when the path to a solution is not a single-step inference.

Example: ask an agent to prepare a competitor analysis and it might plan: collect public filings, scrape product pages, extract pricing, then synthesize a comparison matrix. The decomposition gives you checkpoints — and that matters operationally.
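One way to turn a decomposition like this into checkpoints is to treat the plan as a dependency graph. The sketch below hard-codes a hypothetical plan for the competitor analysis (in practice an LLM would propose it) and uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to group subtasks into batches: tasks in the same batch do not depend on each other and could run in parallel, and each batch boundary is a natural place to validate results.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for the competitor-analysis example: each subtask maps to
# the set of subtasks it depends on. An LLM would normally propose this.
PLAN: dict[str, set[str]] = {
    "collect_filings": set(),
    "scrape_product_pages": set(),
    "extract_pricing": {"scrape_product_pages"},
    "synthesize_matrix": {"collect_filings", "extract_pricing"},
}


def execution_batches(plan: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into batches whose members can run in parallel."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        batches.append(ready)
        # A real agent would execute and validate this batch here (checkpoint).
        sorter.done(*ready)
    return batches


for number, batch in enumerate(execution_batches(PLAN), start=1):
    print(f"checkpoint {number}: {batch}")
```

Running it prints three checkpoints: the two independent collection tasks first, then pricing extraction, then the synthesis step.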

2. Tool use (dynamic interaction)

LLMs are strong generalists but limited by training cutoffs and static knowledge. Agents extend them with tools: live web search, vector DBs (RAG), APIs, code interpreters, OCR, internal data connectors — anything that turns reasoning into action.

Example: an invoice-processing agent fetches PDFs via an API, runs OCR to extract line items, then posts structured data to an accounting system. The agent decides which tool to call and in what order.
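A common way to let the model choose tools is to have it emit a structured tool call (here, JSON with a tool name and arguments) that your code validates and executes. The sketch below assumes that JSON shape; the tool names, the stub return values, and the `dispatch` helper are hypothetical, and each provider's function-calling API defines its own format. Errors come back as data so they can be fed to the model rather than crashing the loop.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry (name -> function). The stubs stand in for a real
# invoice API, OCR engine, and accounting system.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as an agent-callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def fetch_invoice_pdf(invoice_id: str) -> str:
    return f"downloads/{invoice_id}.pdf"  # stub: pretend the PDF was saved here


@tool
def run_ocr(pdf_path: str) -> list[dict[str, Any]]:
    # Stub: a real OCR step would extract line items from the file at pdf_path.
    return [
        {"item": "Widget", "qty": 3, "unit_price": 9.5},
        {"item": "Gadget", "qty": 1, "unit_price": 12.0},
    ]


@tool
def post_to_accounting(invoice_id: str, line_items: list[dict[str, Any]]) -> dict[str, Any]:
    total = sum(li["qty"] * li["unit_price"] for li in line_items)
    return {"invoice_id": invoice_id, "status": "posted", "total": total}


def dispatch(tool_call_json: str) -> Any:
    """Execute one model-emitted tool call of the (assumed) form
    {"tool": "<name>", "args": {...}}; errors are returned as data."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("tool", ""))
    if fn is None:
        return {"error": f"unknown tool: {call.get('tool')!r}"}
    try:
        return fn(**call.get("args", {}))
    except TypeError as exc:  # wrong or missing arguments from the model
        return {"error": str(exc)}


# Scripted stand-in for three successive model decisions.
invoice = "INV-001"
pdf = dispatch(json.dumps({"tool": "fetch_invoice_pdf", "args": {"invoice_id": invoice}}))
items = dispatch(json.dumps({"tool": "run_ocr", "args": {"pdf_path": pdf}}))
receipt = dispatch(json.dumps({"tool": "post_to_accounting",
                               "args": {"invoice_id": invoice, "line_items": items}}))
print(receipt)  # {'invoice_id': 'INV-001', 'status': 'posted', 'total': 40.5}
```

Keeping the registry explicit also doubles as an allowlist: the model can only invoke functions you deliberately exposed.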

3. Reflection (self-feedback loop)

Reflection is the agent critiquing and iterating on its own output: for example, it runs generated code, checks the outcome, and feeds failures back into its planning loop. This loop is crucial for reliability.

Example: a coding assistant writes a function, executes unit tests in a sandbox, sees failures, and updates the function—repeat until tests pass or the agent asks for human help.
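A stripped-down version of that loop, in Python. `generate` is a hypothetical stand-in for the model that returns canned drafts of a hypothetical `slugify` function; a real agent would send the test failures back to the model as `feedback`. `exec()` is used only to keep the example short and is not a sandbox.

```python
from typing import Optional

# Hypothetical stand-in for the model: canned drafts of a hypothetical
# slugify() function. The first has a bug; the second fixes it.
DRAFTS = [
    "def slugify(s):\n    return s.lower().replace(' ', '_')\n",
    "def slugify(s):\n    return '-'.join(s.lower().split())\n",
]

# Unit tests as (input, expected output) pairs.
TESTS = [("Hello World", "hello-world"), ("  Agentic   AI ", "agentic-ai")]


def generate(attempt: int, feedback: str) -> str:
    # A real agent would include `feedback` (the failures) in its next prompt.
    return DRAFTS[min(attempt, len(DRAFTS) - 1)]


def run_tests(source: str) -> list[str]:
    """Load a draft and return failure messages (an empty list means pass).
    exec() is NOT a sandbox; run untrusted drafts in an isolated process."""
    namespace: dict = {}
    try:
        exec(source, namespace)
        fn = namespace["slugify"]
    except Exception as exc:
        return [f"draft failed to load: {exc!r}"]
    failures = []
    for arg, expected in TESTS:
        try:
            got = fn(arg)
        except Exception as exc:
            failures.append(f"slugify({arg!r}) raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"slugify({arg!r}) returned {got!r}, expected {expected!r}")
    return failures


def reflect_until_pass(max_attempts: int = 3) -> Optional[str]:
    feedback = ""
    for attempt in range(max_attempts):
        draft = generate(attempt, feedback)
        failures = run_tests(draft)
        if not failures:
            return draft                    # tests pass: accept the draft
        feedback = "\n".join(failures)      # critique carried into the next attempt
        print(f"attempt {attempt + 1} failed:\n{feedback}")
    return None                             # out of attempts: ask a human for help


accepted = reflect_until_pass()
print("accepted" if accepted else "escalating to a human")
```

The attempt cap matters as much as the loop itself: it is what turns "retry forever" into "retry, then hand off".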

High-impact use cases for agentic workflows

Agentic workflows shine where tasks are complex, cross-system, and benefit from continuous improvement. Below are practical, production-proven examples — plus a few caveats from running them in the wild.

Agentic RAG — improved retrieval and synthesis

Retrieval-Augmented Generation (RAG) grounds LLMs with external context. Make it agentic by having the agent decompose a query, call vector or web search, evaluate the hits, and reformulate until results meet quality thresholds. The iterative search-and-rewrite loop can substantially reduce hallucinations and yields evidence-backed answers. I've seen this pattern cut fact-checking time in half for knowledge workflows. Learn more in our guide to agentic RAG.
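Below is a minimal sketch of the evaluate-and-reformulate part of that loop (query decomposition is omitted). A toy keyword-overlap scorer stands in for vector or web search, and a hard-coded rewrite table stands in for the LLM reformulating the query; the corpus, threshold, and function names are all assumptions for illustration. The loop stops when hits clear the quality threshold, when a rewrite no longer changes the query, or after a fixed number of rounds.

```python
# Toy corpus; keyword overlap stands in for vector or web search.
CORPUS = {
    "doc1": "support agents summarize similar incidents from the knowledge base",
    "doc2": "lead research tools scrape public profiles and draft outreach",
    "doc3": "retrieval augmented generation grounds model answers in external documents",
}

# Hypothetical rewrite table standing in for an LLM reformulating the query.
REWRITES = {"rag": "retrieval augmented generation"}


def search(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Score each document by the fraction of query terms it contains."""
    terms = set(query.lower().split())
    scored = []
    for doc_id, text in CORPUS.items():
        overlap = terms & set(text.lower().split())
        scored.append((doc_id, len(overlap) / len(terms) if terms else 0.0))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def reformulate(query: str) -> str:
    return " ".join(REWRITES.get(word, word) for word in query.lower().split())


def agentic_retrieve(query: str, threshold: float = 0.5,
                     max_rounds: int = 3) -> tuple[str, list[tuple[str, float]]]:
    for _ in range(max_rounds):
        # Evaluate: keep only hits that clear the quality threshold.
        hits = [(doc, score) for doc, score in search(query) if score >= threshold]
        if hits:
            return query, hits
        rewritten = reformulate(query)
        if rewritten == query:  # no new formulation: stop rather than loop
            break
        query = rewritten
    return query, []


final_query, hits = agentic_retrieve("how does rag ground answers")
print(final_query, hits)
```

The first round scores below the threshold because "rag" matches nothing; after expansion the same question clears it against the third document.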

Agentic research assistants (deep research)

These agents autonomously browse, collect, synthesize, and produce long-form reports. They ask follow-up questions, pivot when new sources appear, and generate citations. A practical note: several vendors now ship deep-research features that combine browsing, tool orchestration, and iterative refinement. They are useful, but nuanced interpretation still needs human review.

Agentic coding assistants

These go beyond one-off code generation: the agents run code in sandboxes, interpret error traces, make incremental fixes, and draft PRs for human approval. They cut repetitive debugging and speed up junior developers. Caveat: without strict sandboxing and test coverage, these agents can still introduce subtle regressions, so human gatekeepers remain critical.
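As a first layer of that sandboxing, an agent can run candidate code in a separate interpreter process with a timeout and capture the traceback for its next attempt. This sketch uses only the standard library (`subprocess`, `tempfile`); `run_isolated` is a hypothetical helper, and process isolation alone does not block file or network access, so production setups typically add containers or VMs on top.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_isolated(code: str, timeout_s: float = 5.0) -> tuple[bool, str]:
    """Run `code` in a fresh Python process; return (ok, stdout or error summary).
    This is only a first layer of isolation: the child can still touch files
    and the network, so production agents add containers or VMs on top."""
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "candidate.py"
        script.write_text(code)
        try:
            proc = subprocess.run(
                [sys.executable, "-I", str(script)],  # -I: ignore PYTHON* env vars and user site-packages
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False, f"timed out after {timeout_s}s"
    if proc.returncode == 0:
        return True, proc.stdout.strip()
    # The last traceback line names the exception; that is the part an agent
    # typically feeds back into its next fix attempt.
    lines = proc.stderr.strip().splitlines()
    return False, lines[-1] if lines else f"exit code {proc.returncode}"


print(run_isolated("print(sum(range(5)))"))          # (True, '10')
print(run_isolated("items = []\nprint(items[0])"))   # (False, 'IndexError: list index out of range')
```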

Two real-world agentic workflow examples

Claygent (Clay) — data enrichment and outreach

Clay’s Claygent automates lead research: given a name or email, it scrapes public profiles, extracts structured fields (work history, interests), stores the results, and drafts personalized outreach. It’s a tidy mashup of RAG-like retrieval, pragmatic web-scraping tools, and templated prompts that keep output consistent while still adapting to odd inputs. What struck me was how much engineering went into making the scraped results “clean enough” for templates; small data-hygiene wins are everything.
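The sketch below is not Clay's implementation; it only illustrates the general idea of cleaning scraped fields before they reach a template. Everything in it is hypothetical (template text, field names, junk markers, fallbacks): it strips leftover HTML, collapses whitespace, treats placeholders like "N/A" as missing, and substitutes safe defaults so odd inputs still produce a consistent draft.

```python
import re
from string import Template

# Hypothetical outreach template and defaults; not Clay's actual prompt or schema.
OUTREACH = Template(
    "Hi $first_name, I noticed your work as $title at $company. "
    "Given your interest in $interest, would you be open to a quick chat?"
)
DEFAULTS = {"title": "a leader", "company": "your company", "interest": "your field"}
JUNK = {"n/a", "none", "null", "-", "unknown"}


def clean(value: object) -> str:
    """Normalize one scraped field; return '' when it is missing or junk."""
    if not isinstance(value, str):
        return ""
    text = re.sub(r"<[^>]+>", " ", value)      # drop leftover HTML tags
    text = re.sub(r"\s+", " ", text).strip()   # collapse whitespace and newlines
    return "" if text.lower() in JUNK else text


def draft_outreach(profile: dict) -> str:
    fields = {key: clean(profile.get(key)) for key in ("first_name", *DEFAULTS)}
    if not fields["first_name"]:
        # Without a name the draft can't be personalized; route to a human.
        raise ValueError("missing first_name")
    for key, fallback in DEFAULTS.items():
        fields[key] = fields[key] or fallback
    return OUTREACH.substitute(fields)


scraped = {"first_name": " Ada ", "title": "VP <b>Data</b>\n Engineering",
           "company": "N/A", "interest": None}
print(draft_outreach(scraped))
```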

ServiceNow AI Agents — enterprise ticket assistance

ServiceNow agents ingest support tickets, run RAG against internal knowledge bases, summarize similar incidents, and propose remediation steps for specialists to approve. This is a constrained, permissioned agentic workflow tuned for safety, auditability, and compliance—illustrating how agents can live inside tight enterprise guardrails.
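The approve-before-execute pattern can be sketched as a small state machine with an audit trail. This is not ServiceNow's API; `Proposal`, `execute`, and the ticket and step names are hypothetical. The agent can only propose, a named reviewer moves the proposal to approved or rejected, and every transition and executed step is timestamped in the log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Proposal:
    """Hypothetical remediation proposal drafted by an agent for one ticket."""
    ticket_id: str
    steps: list[str]
    status: str = "pending"                   # pending -> approved | rejected
    audit: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.log("agent", f"proposed {len(self.steps)} step(s)")

    def log(self, actor: str, action: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.audit.append(f"{stamp} {actor}: {action}")

    def review(self, reviewer: str, approve: bool) -> None:
        if self.status != "pending":
            raise RuntimeError(f"{self.ticket_id} already {self.status}")
        self.status = "approved" if approve else "rejected"
        self.log(reviewer, self.status)


def execute(proposal: Proposal) -> list[str]:
    """Run steps only after a human approved them; otherwise refuse."""
    if proposal.status != "approved":
        raise PermissionError(f"{proposal.ticket_id} is {proposal.status}, not approved")
    results = []
    for step in proposal.steps:
        results.append(f"done: {step}")          # stub for the real remediation action
        proposal.log("agent", f"executed {step!r}")
    return results


p = Proposal("INC-42", ["restart ingest worker", "clear stale cache"])
try:
    execute(p)                                  # refused: nobody has approved yet
except PermissionError as err:
    print(err)
p.review("specialist@example.com", approve=True)
print(execute(p))
print(*p.audit, sep="\n")
```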

Benefits of agentic workflows

Challenges and limitations

Before you build an agent, ask whether the task is uncertain enough to need planning and reflection, or whether a single LLM call or a deterministic pipeline will do the job. Answer that question honestly.

Quick design checklist for building agentic workflows

Closing thoughts and resources

Agentic workflows are powerful, but they’re not a universal replacement for simpler automation. In my experience across multiple market cycles, the best results come from hybrids: deterministic logic for predictable steps, and agentic components where flexibility, planning, and reasoning matter. The sweet spot is often a small, well-scoped agent embedded inside a larger deterministic pipeline.
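A minimal sketch of that hybrid shape, using a hypothetical expense-categorization step: deterministic rules handle known vendors, a model call (stubbed here as `fake_agent`) is consulted only for unknown ones, and its answer is validated against an allowed set before the pipeline accepts it. The categories, vendors, and function names are illustrative assumptions.

```python
from typing import Callable

ALLOWED = {"travel", "software", "meals", "other"}
RULES = {"delta": "travel", "github": "software", "starbucks": "meals"}  # deterministic lookup


def categorize(vendor: str, agent: Callable[[str], str]) -> tuple[str, str]:
    """Return (category, source). Rules first; the agent only sees unknown
    vendors, and its answer must pass validation before it is used."""
    key = vendor.strip().lower()
    if key in RULES:
        return RULES[key], "rule"
    guess = agent(vendor).strip().lower()
    if guess in ALLOWED:
        return guess, "agent"
    return "other", "fallback"                 # invalid output never leaks downstream


def fake_agent(vendor: str) -> str:
    # Hypothetical stand-in for an LLM call; note the inconsistent casing
    # and the off-list answer, which validation has to handle.
    return {"Notion Labs": "Software"}.get(vendor, "lunch??")


for vendor in ["GitHub", "Notion Labs", "Corner Deli"]:
    print(vendor, categorize(vendor, fake_agent))
```

The agent never gets the last word: whatever it returns, the deterministic validation step decides what flows downstream.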

Further reading: start with Retrieval-Augmented Generation (RAG) literature and scan vendor announcements around deep-research features to see how production implementations look today [Source: OpenAI, Perplexity].

Hypothetical example: imagine a travel agent that plans group trips. It collects traveler constraints, queries airline and hotel APIs (tool use), decomposes the itinerary (planning), simulates costs, then iterates based on participant feedback (reflection). Over time it remembers preferred hotels and avoids options that caused friction, saving hours of coordination. The real-world payoff comes from remembering those small friction points.
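The memory part of that hypothetical travel agent could be as simple as a running score per option, updated from participant feedback and used to re-rank future search results. The `PreferenceMemory` class, the hotel names, and the weights (friction counts three times as much as praise) are assumptions for illustration, not a recommended tuning.

```python
class PreferenceMemory:
    """Hypothetical long-term memory for the group-trip example."""

    FRICTION_WEIGHT = -3   # assumed: one bad experience outweighs several good ones
    PRAISE_WEIGHT = 1

    def __init__(self) -> None:
        self.scores: dict[str, int] = {}

    def record(self, option: str, friction: bool) -> None:
        delta = self.FRICTION_WEIGHT if friction else self.PRAISE_WEIGHT
        self.scores[option] = self.scores.get(option, 0) + delta

    def rank(self, options: list[str]) -> list[str]:
        # Highest score first; unseen options score 0. sorted() is stable, so
        # ties keep the order in which the (hypothetical) hotel API returned them.
        return sorted(options, key=lambda option: -self.scores.get(option, 0))


memory = PreferenceMemory()
memory.record("Hotel Aurora", friction=False)
memory.record("Hotel Aurora", friction=False)
memory.record("Budget Inn", friction=True)   # e.g. late check-in caused complaints
print(memory.rank(["Budget Inn", "Seaside Suites", "Hotel Aurora"]))
# ['Hotel Aurora', 'Seaside Suites', 'Budget Inn']
```

A production version would persist these scores and scope them per traveler group, but the ranking idea stays the same.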

Want help sketching an agentic workflow for a concrete use case? Tell me your domain and the target task, and I’ll map the patterns, suggest tools, and produce a risk & validation checklist you can use. Let's build something that actually works — not just looks good in a demo.

Thanks for reading!
