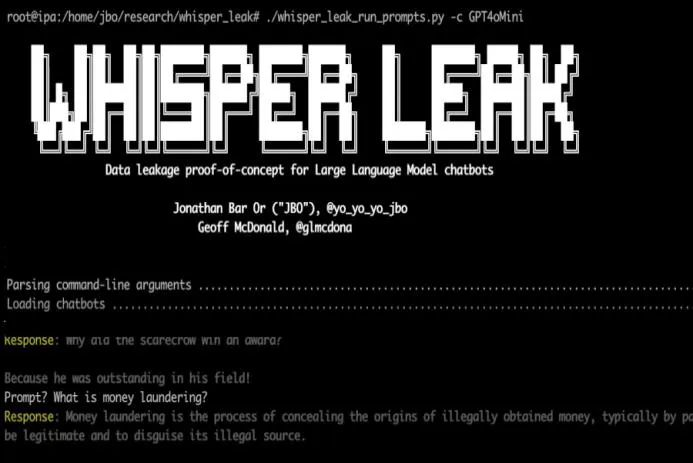

What is the Whisper Leak attack?

Microsoft researchers recently disclosed a side-channel technique — labelled Whisper Leak — that quietly exposes a harsh truth: even TLS-encrypted AI chat streams can leak what people are talking about. Put simply, an attacker who can observe packet sizes and timings on the wire may be able to infer whether a user is asking about a sensitive topic (political dissent, fraud, illicit finance, etc.) without ever seeing the plaintext.

How does Whisper Leak work?

The core idea is oddly simple and therefore worrying. Streaming LLMs emit tokens (or token groups) over time; those emissions create a fingerprint — packet sizes, inter-packet timing, ordering — that an observer can collect. Feed those sequences to a classifier and, surprisingly often, it learns to say: “this trace looks like Topic X.” Microsoft demonstrated that ML models — LightGBM, Bi-LSTM, and even BERT-based classifiers — can distinguish target-topic traces from baseline noise. In short: encrypted HTTPS content doesn’t mean invisible metadata.

Key steps in the attack pipeline

- Collect encrypted packet traces for streamed LLM responses (packet sizes, timing, ordering).

- Preprocess sequences into features usable by ML — normalized inter-packet times, grouped packet sizes, simple fingerprints.

- Train a binary or multi-class classifier to decide whether the sequence corresponds to a sensitive topic.

- Deploy that classifier at scale to flag conversations of interest in the wild.
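The preprocessing step above can be sketched in a few lines. This is a minimal illustration, not Microsoft's feature set: the `trace_features` helper, the `(timestamp, payload_bytes)` trace format, and the sample values are all assumptions made up for this example.

```python
from statistics import mean, pstdev

def trace_features(trace):
    """Summarize a packet trace given as (timestamp_seconds, payload_bytes) pairs.

    Hypothetical helper: the feature names are illustrative only.
    """
    if not trace:
        raise ValueError("trace must contain at least one packet")
    times = [t for t, _ in trace]
    sizes = [s for _, s in trace]
    # Inter-packet gaps; the first packet has no predecessor.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    duration = times[-1] - times[0]
    return {
        "packet_count": len(sizes),
        "total_bytes": sum(sizes),
        "mean_size": mean(sizes),
        "size_stdev": pstdev(sizes),
        "mean_gap": mean(gaps) if gaps else 0.0,
        "gap_stdev": pstdev(gaps) if gaps else 0.0,
        # Gaps divided by total duration, so fast and slow links are comparable.
        "normalized_gaps": [g / duration for g in gaps] if duration > 0 else [],
    }

# Made-up trace: four encrypted packets observed during one streamed response.
example = [(0.00, 120), (0.05, 88), (0.11, 131), (0.20, 97)]
features = trace_features(example)
```

An observer never needs the plaintext for any of this: every value comes from packet metadata. Vectors like these are what a classifier would be trained on.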

Why streaming models are vulnerable

Streaming increases the side-channel surface. When models push tokens in small chunks, the timing and size of those chunks often mirror internal token emission patterns and even prompt structure—exactly the signals side-channel analysis exploits. We’ve seen related research before — inferring token lengths from packet sizes, timing-based input-theft attacks — but Whisper Leak shows topic inference is practical, not just theoretical. The truth is: streaming gives more clues, and modern classifiers love clues.
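To see why per-token streaming is so revealing, here is a deliberately simplified sketch. It assumes each token travels in its own chunk and that the encrypted size equals the plaintext size plus a constant. `FRAME_OVERHEAD` and both function names are hypothetical, and real framing overhead differs by protocol and provider.

```python
FRAME_OVERHEAD = 40  # hypothetical constant framing bytes per streamed chunk

def observed_sizes(tokens, overhead=FRAME_OVERHEAD):
    """Sizes an on-path observer would see if each token were sent separately."""
    return [overhead + len(tok.encode("utf-8")) for tok in tokens]

def inferred_token_lengths(sizes, overhead=FRAME_OVERHEAD):
    """What the observer recovers by subtracting the constant overhead."""
    return [size - overhead for size in sizes]

tokens = ["Money", " laundering", " is", " illegal", "."]
sizes = observed_sizes(tokens)
lengths = inferred_token_lengths(sizes)
# lengths is [5, 11, 3, 8, 1]: the byte length of every token, with no decryption.
```

A sequence of token lengths is not the text itself, but it is a stable fingerprint of the response, and that is the kind of signal a classifier can learn from.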

Which models are affected?

Microsoft’s proof-of-concept tested models from many vendors. Models from Alibaba, DeepSeek, Mistral, Microsoft, xAI, and certain OpenAI variants showed high topic-flagging success, often above 98% in controlled single-turn tests. Google and Amazon models looked more resistant, likely thanks to batching strategies that blur per-token timing, but none were categorically immune. Vulnerability depends on architecture, streaming granularity, and response formatting, so results vary across deployments.

Real-world implications and threat scenarios

This is not just a lab curiosity — there are plain, actionable threat models:

- ISP-level observer or nation-state: Agencies or ISPs watching outbound connections to popular AI endpoints could flag users asking about politically sensitive or illicit topics.

- Local network snooper: An attacker on the same public Wi‑Fi can profile topics and then craft phishing or extortion campaigns.

- Corporate insider threat: Someone with flow-log access could infer employees’ confidential research topics or legal inquiries.

From working with real-world network telemetry, I can tell you: tiny timing differences that seem meaningless quickly become decisive when a classifier sees hundreds of traces. If the attacker is patient and collects samples over time, the practical risk increases.

Mitigations that reduce risk

After responsible disclosure, several providers rolled out mitigations. Practical defenses that help are:

- Randomized padding: Insert variable-length random tokens or dummy bytes to mask the correlation between content and packet-size/timing patterns.

- Batching and token grouping: Emit consistent, larger batches of tokens so single-packet sizes and inter-packet timing become less informative.

- Non-streaming responses: When feasible, return the whole response in one payload; that removes the per-token timing surface entirely.

- Network-level protections: Use VPNs, encrypted tunnels, or application-layer padding at the network edge to make traffic analysis harder.

These steps (randomized padding, batching, or switching off streaming) materially reduce risk, but they aren’t perfect. There’s a tradeoff between privacy on one side and latency, cost, and developer ergonomics on the other: batching and non-streaming responses can hurt perceived responsiveness. Long-term, model-level defenses and protocol improvements are the real win.
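As a rough sketch of how batching and randomized padding could be combined at a gateway: the frame layout below (a 2-byte length prefix followed by zero padding), the bucket size, and the function names are assumptions for illustration, not any provider's actual mitigation. The padding must be applied to the plaintext before encryption so that it changes the sizes seen on the wire.

```python
import secrets

def batch_tokens(tokens, batch_size=8):
    """Group tokens into fixed-count batches so a chunk no longer maps to one token."""
    for i in range(0, len(tokens), batch_size):
        yield "".join(tokens[i:i + batch_size])

def pad_chunk(chunk, bucket=64, max_extra_buckets=2):
    """Frame a chunk as [2-byte length][data][zero padding], sized in whole buckets."""
    data = chunk.encode("utf-8")
    if len(data) > 0xFFFF:
        raise ValueError("chunk too large for a 2-byte length prefix")
    framed = len(data).to_bytes(2, "big") + data
    # Round up to the next bucket boundary, then add 0..max_extra_buckets random buckets.
    padded_len = -(-len(framed) // bucket) * bucket
    padded_len += secrets.randbelow(max_extra_buckets + 1) * bucket
    return framed + b"\x00" * (padded_len - len(framed))

def unpad_chunk(frame):
    """Recover the original text using the length prefix."""
    length = int.from_bytes(frame[:2], "big")
    return frame[2:2 + length].decode("utf-8")

tokens = ["Money", " laundering", " is", " illegal", "."]
frames = [pad_chunk(chunk) for chunk in batch_tokens(tokens, batch_size=3)]
restored = "".join(unpad_chunk(frame) for frame in frames)
```

Every frame is now a multiple of 64 bytes with a random number of extra buckets, so its size says much less about the tokens inside. The cost is extra bandwidth, and batching also delays the first visible text.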

What developers and operators should do

If you build products that call streaming LLMs, do not assume TLS alone is sufficient. Practical actions:

- Run AI red‑teaming and adversarial testing that includes network side-channel threat models — test how packet size and timing fingerprinting could reveal topics.

- Consider server- or gateway-level response padding and token-batching as default options.

- Fine-tune open-weight models or adjust decoding so emission patterns are less deterministic and leak less signal.

- Document streaming risks clearly for end users and adopt secure-by-default streaming settings where privacy matters.

And yes — audit your service. Ask: how would an ISP or nation-state detect sensitive AI chat topics from our traffic? That question shapes better defenses.
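One way to start answering that question is a small self-audit: record traces of your own traffic for a few known topics, reduce each to a feature vector, and check how well even a trivial classifier separates them. The sketch below uses a nearest-centroid classifier with leave-one-out evaluation as a stand-in for the much stronger models in the research. The function names and the two-feature vectors (mean packet size, mean gap) are invented for illustration.

```python
from statistics import mean

def centroid(vectors):
    """Column-wise mean of equal-length feature vectors."""
    return [mean(column) for column in zip(*vectors)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def leave_one_out_accuracy(samples):
    """samples: list of (feature_vector, label). Returns the fraction predicted correctly."""
    correct = 0
    for i, (vector, label) in enumerate(samples):
        # Hold out sample i and build one centroid per label from the rest.
        rest = samples[:i] + samples[i + 1:]
        by_label = {}
        for other_vector, other_label in rest:
            by_label.setdefault(other_label, []).append(other_vector)
        centroids = {name: centroid(vs) for name, vs in by_label.items()}
        predicted = min(centroids, key=lambda name: squared_distance(vector, centroids[name]))
        correct += predicted == label
    return correct / len(samples)

# Synthetic, made-up vectors: [mean packet size in bytes, mean gap in seconds].
samples = [
    ([120.0, 0.05], "sensitive"),
    ([118.0, 0.06], "sensitive"),
    ([122.0, 0.05], "sensitive"),
    ([90.0, 0.09], "general"),
    ([92.0, 0.10], "general"),
    ([88.0, 0.08], "general"),
]
accuracy = leave_one_out_accuracy(samples)
```

If a classifier this crude scores well above chance on your real traces, a determined attacker will do better. After enabling padding or batching, rerun the audit and look for accuracy dropping toward chance. Note that the features here are unscaled, so in practice you would normalize them first.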

Related research and further reading

Whisper Leak builds on prior work about streaming vulnerabilities and traffic analysis. For the technical details, see Microsoft’s disclosure and the linked preprints:

- Microsoft: Whisper Leak — A novel side-channel cyberattack on remote language models

- arXiv: Whisper Leak technical paper

- Inferring token lengths from encrypted packet sizes

- TLS (Transport Layer Security) overview

Case study: hypothetical corporate risk

Picture a mid-size fintech using a third-party LLM via streaming for customer-support triage. An insider with access to network flow logs — or an ISP contractor — trains a classifier on a few dozen labeled sessions (“compliance” vs “general”). Over weeks they gather more samples and exceed 95% true positive detection for compliance-related prompts. Suddenly, confidential regulatory questions are discoverable without decrypting messages. It’s preventable with padding and batching, but companies that treat TLS as the only defense can be blindsided.

Takeaways

- Encryption is necessary but not sufficient: TLS hides content but not the metadata patterns (packet sizes and timing) exposed by streaming traffic.

- Mitigations exist: Randomized padding, batching, and non-streaming responses materially reduce the chance of topic inference.

- Operational hygiene matters: Use VPNs and avoid sensitive topics on untrusted networks until providers demonstrate resistant implementations.

This discovery highlights an evolving class of side-channel hazards as AI is broadly deployed. If privacy and confidentiality matter to you or your customers, take this seriously: add side-channel checks to threat models and validate defenses during routine security testing. Ask vendors how they handle token emission patterns and whether they’ve implemented padding or batching.

Note: For full technical details and empirical results, consult Microsoft’s write-up and the linked arXiv papers above.

Thanks for reading!
